QA bot using ChatGpt

ChatGpt is the AI sensation of the year. Like everyone else, I was curious to try using it to simplify day to day activities. Here’s a simple project I did in R, to collect data and answer as many questions from Oracle Forums, using ChatGpt.

Project

Answer as many questions in selected communities from Oracle Forums

Oracle Forums – User Stats

Solution

ChatGpt limitations –

Before discussing the solution in detail, we need to keep in mind that ChatGpt has some limitations –

- Hallucination – may generate a completely made up response, which is irrelevant/incorrect

- We can send only 3 questions per minute, using OpenAI rest API call

- Model used “Chat-gpt-3.5”, which is freely available solution from OpenAI, at the time of writing this article

- ChatGpt 3.5 – is trained till Sep 2021 – So, it knows about Oracle tech stack/releases till Sep 2021

R Limitations

Memory limits and size – these are specific to the machine and the R version installed on that machine

Oracle Forums Limitations

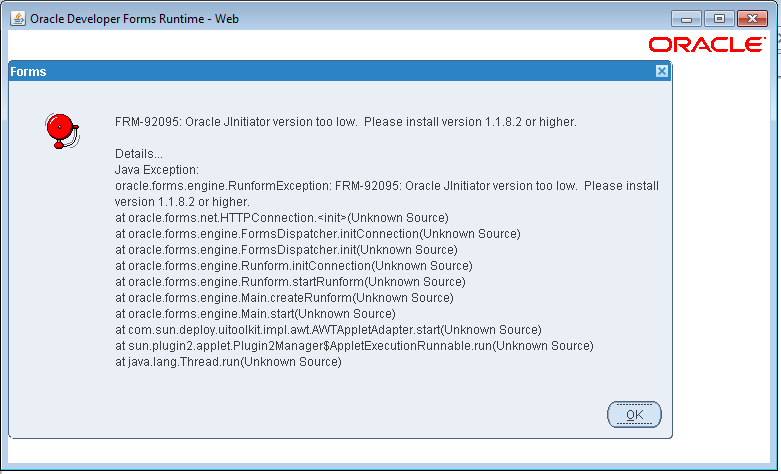

Since OTN has been recently migrated to Oracle APEX cloud, page errors and most of the functionality is error-prone, resulting in unexpected page errors while loading even manually.

Data Collection

Select topics you are familiar with, to verify and judge whether ChatGpt response is correct or not.

Collected 50 html pages manually, related to topics – Oracle BI Publisher, OBIEE, SQL, REST API, OAUTH, Integration, ML, AI.

Tried automatic data collection, using RSelenium and R; but in vain. Automating this task was tedious and time-consuming and also Oracle Forums were recently migrated to Oracle APEX, this migration process caused a lot of downtime, irrational/unexpected/unavailable functionality in most of Oracle Forums threads.

Data Preparation

Select questions/posts; wisely based on the post date, post tags. Skim through collected data and remove posts related to latest versions, released after Sep 2021

Prerequisites to use ChatGpt 3.5

Create a user account in OpenAI.com, one can log in using Google/Apple/Microsoft accounts

Generate OpenAI Key or token – Go to https://platform.openai.com/account/api-keys, to create a new secret key

Save this newly generated secret key, this is required for authentication for each API call we are about to make

Install libraries, (rvest, httr, jsonlite) to make REST API calls

Running Code

Code is uploaded to github. You can see code snippets here:

library(httr)

library(rvest)

#library(tidyr)

#library(xml2)

library(jsonlite)

library (RSelenium)

#setwd(dir = “/Users/arunamalla/Documents/GitHub/arunamalla.github.io/_posts/_site/Chatgpt_ora_forums/Data”)

OPENAI_API_KEY = “<

# read the file urls_txt.txt and feed this to chatgpt 3.5 using an API KEY

prompt <- “Write a simple linear regression program in R” #text prompt

make_req_gpt <- function(prompt){

headers <- c(

‘Content-Type’ = ‘application/json’,

‘Authorization’ = paste0(‘Bearer ‘, OPENAI_API_KEY)

)

data <- list(

‘model’ = ‘gpt-3.5-turbo’,

‘messages’ = list(

list(‘role’ = ‘system’, ‘content’ = ‘You are a helpful assistant.’),

list(‘role’ = ‘user’, ‘content’ = prompt)

),

‘temperature’ = 0.0

)

json_data <- toJSON(data, auto_unbox = TRUE)

response <- POST(

url = ‘https://api.openai.com/v1/chat/completions’,

body = json_data,

add_headers(.headers = headers),

encode = “json”

)

return (response)

}

Client Code

Load our custom library with make_req_gpt function from the collect_chatgpt-.R file, reads and preps the data from all .html pages we scraped from Oracle Forums.

In addition, parallel processing is implemented to make the R code and Overall solution a tad bit faster.

# read each html page in Data folder and retreive post URLS from them

# get the post details for each URL and store it in a data frame

# feed these post details to chatgpt

library(readr)

library(dplyr)

library(httr)

library(rvest)

library(jsonlite)

library(foreach)

source(“/Users/arunamalla/Documents/GitHub/Bgit/R Packages/collect_chatpgt-.R”)

# set working directory

#setwd(dir = “/Users/arunamalla/Documents/GitHub/arunamalla.github.io/_posts/_site/Chatgpt_ora_forums/Data”)

pgurls_df <- data.frame (“url”, “pagenum”)

colnames(pgurls_df) <- c(“url”, “pagenum”)

data.files <- list.files(path = getwd(), pattern = “*.html”, full.names = TRUE)

for (i in 1:length(data.files)) {

read_html (read_file(file = data.files[i])) -> response_body_html

response_body_html %>% html_nodes(“.rw-ListItem-primaryText a”) %>% html_attr(name = “href”) -> pgUrls

pgUrls %>% as.data.frame.character() %>% mutate(pagenum=i) -> pgUrls

colnames(pgUrls) <- c(“url”, “pagenum”)

merge.data.frame (pgurls_df, pgUrls, by = intersect(names(pgurls_df), names(pgUrls)), all.x = TRUE, all.y = TRUE) -> pgurls_df

}

# remove unused objects from memory

rm(list=c(“pgUrls”, “response_body_html”, “i”))

# Load required libraries

library(foreach)

library(iterators)

library(doParallel)

# Create a cluster

makeCluster(2) -> cl1

registerDoParallel(cl = cl1)

# Define the run_parallel function

run_parallel <- function(i, df) {

df$url <- df_unique[i, “url”]

as.character(df_unique[i, “url”]) -> gurl

df$url <- gurl

gurl_data <- httr::GET(gurl, user_agent(“Mozilla/5.0”))

gurl_data_html <- content(gurl_data, as = “text”, encoding = “UTF-8”) %>% read_html()

qatitle <- gurl_data_html %>% html_nodes(“.ds-Question-text .ds-Question-title”) %>% html_text()

qaattrs <- gurl_data_html %>% html_nodes(“.ds-Question-text .ds-Question-attrs”) %>% html_text2()

qabody <- gurl_data_html %>% html_nodes(“.ds-Question-text .ds-Question-body”) %>% html_text()

qalock <- gurl_data_html %>% html_nodes(“.ds-ContentRowReport-itemDescription”) %>% html_text()

if(length(qalock)>0) {

df$qa_lock <- as.character(qalock[1])

}

else {

df$qalock <- as.character(“NA”)

if (length(qatitle) > 0) df$qa_title <- as.character(qatitle)

if (length(qaattrs) > 0) df$qa_attrs <- as.character(qaattrs)

if (length(qabody) > 0) df$qa_body <- as.character(qabody)

}

tryCatch({

if (length(qabody) > 0) {

gpt_prompt <- paste0(“Question title:\n”, df$qa_title, “\nquestion details:”, df$qa_body)

gpt_response <- make_req_gpt(gpt_prompt)

if(gpt_response$status_code != 200){

warning(paste0(“Request completed with error. Code: “, gpt_response$status_code

, “, message: “, gpt_response$error$message))

df$error = gpt_response$error$message

df$message = gpt_response$error$message

df$status_code = gpt_response$status_code

print(“error occurred – sleeping for 20 sec”)

Sys.sleep(time=20)

}

else {

response_content <- parse_json(content(gpt_response, “text”, encoding = “UTF-8”))$choices[[1]]$message$content

df$prompt_content = gpt_prompt

df$chatgpt_response = response_content

}

# print(paste0(“gpt response – ” , response_content))

Sys.sleep(time=20)

}

},

error = function(e) {

print(e)},

warning = function(w) {

print(w)}

, finally = print(paste0(“processed url – “, i)))

return(df)

}

# Create an empty core_output object

core_output <- list(url = character(), qa_title = character(), qa_attrs = character(), qa_body = character())

# Run the parallel loop and retrieve the updated core_output

system.time({

df1 <- foreach(j = 851:950, .combine = ‘rbind’, .init = core_output) %do% {

run_parallel(j, core_output)

}

})

# Run the parallel loop, call the chatgpt api, and retrieve the updated core_output

system.time({

df1 <- foreach(j = 1:1069, .combine = ‘rbind’, .init = core_output) %do% {

run_parallel(j, core_output)

}

})

# Stop the cluster

stopCluster(cl1)

Post responses in Oracle Forums

Manually verify each question content and the ChatGpt response, verify whether the responses are authentic and correct, align with Oracle Forums policies and guidelines.

Post responses as comments in each question, manually

Further improvements

Data Collection/Scraping – can be automated using RSelenium and R, GeckoDrivers

Provided Oracle Forums latest migration to Oracle APEX cloud is more stabilized and causes no issues while accessing data using web drivers

Data Preparation

Set and store information such as Topics, corresponding Community thread URL, the latest release info effective on or before Sep 2021

Pre-process post title and post content, to decide whether it’s related to any of our input topics/releases set in point #2.1

Posting responses in Oracle Forums

Can be automated. However, verifying the response is correct or not should be done manually

Conclusion

Although one can experience a significant improvement in productivity and by solving issues at a faster pace; one cannot avoid manual intervention in terms of verifying the generated response, and also whether the suggested solution is working or not. This demands a certain level of familiarity with the topics being requested. In my case, it’s OBIEE, Oracle BI Publisher, R, Python, ML, AI, SQL, etc.